Johannes Ackermann

I am a fourth-year PhD student at the University of Tokyo, working on Reinforcement Learning under the supervision of Masashi Sugiyama, and a part-time researcher at RIKEN AIP.

I previously interned at Sakana AI and at Preferred Networks. Prior to starting my PhD, I worked on applied ML for Optical Communication at Huawei, obtained a B.Sc. and M.Sc. in Electrical Engineering and Information Technology from the Technical University of Munich, and wrote my Master’s Thesis at ETH Zurich’s Disco Group.

I am particularly interested in the nature of “tasks” in RL, here defined as the combination of transition and reward function:

-

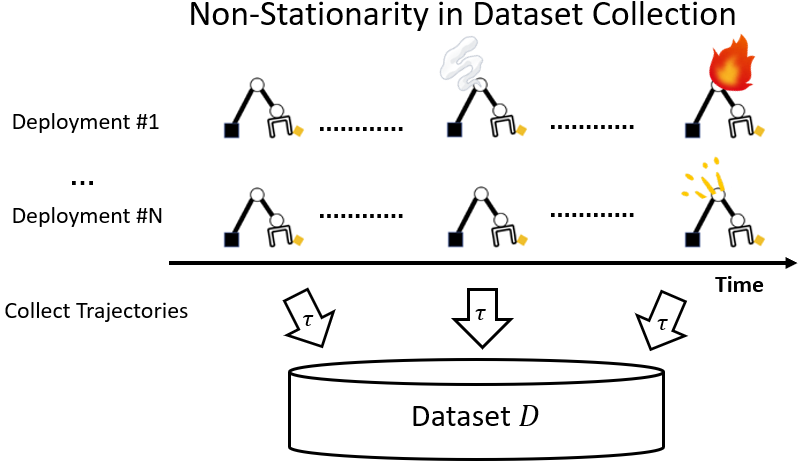

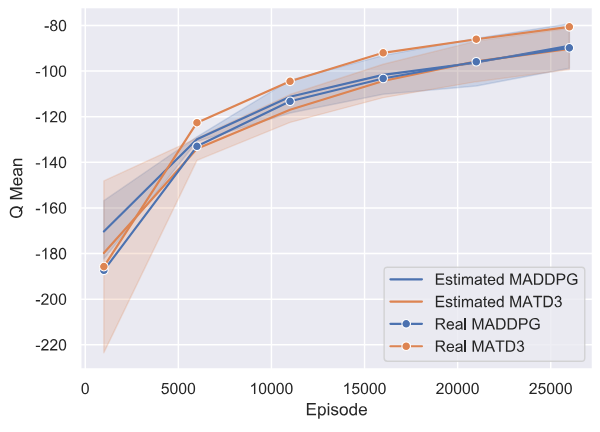

Changing Tasks: How can we deal with changing tasks during dataset collection for Offline RL [RLC1] or changing dynamics shift during deployment [RLC2]?

- Structure of Tasks: In Multi-Task RL, all tasks are usually treated as equally (dis)similar. I investigated how to identify and use task relations, by learning continuous task spaces [Thesis, Chapter 3] and task clusterings [ECML-PKDD1].

-

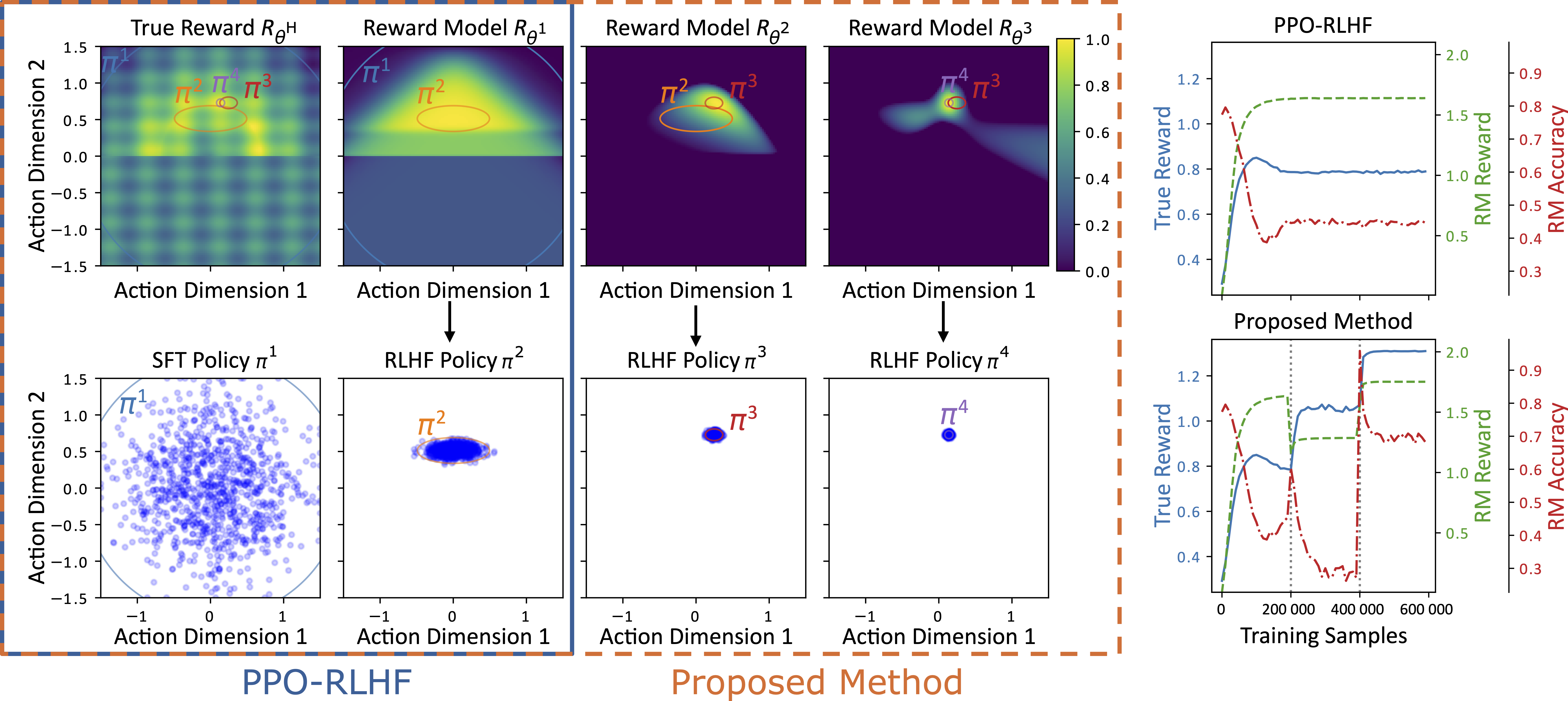

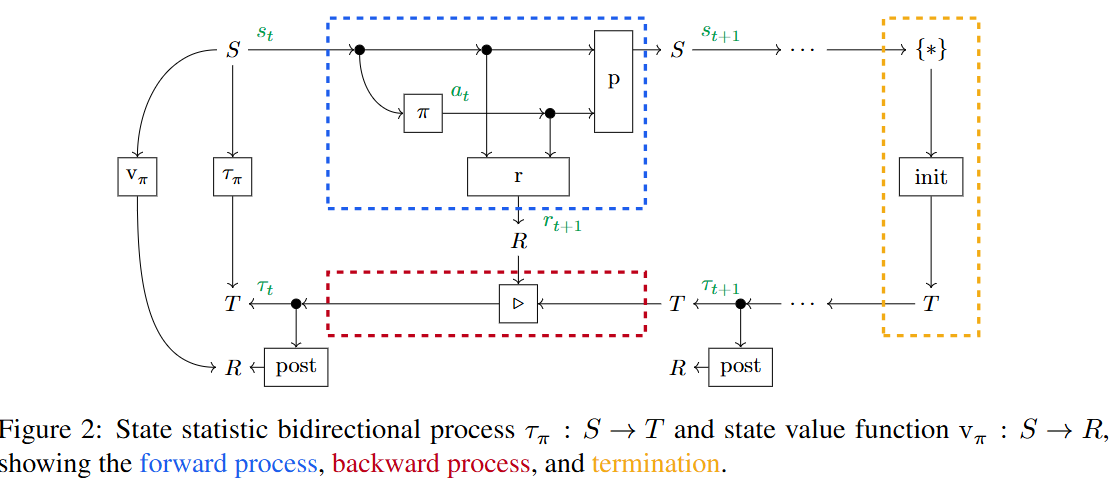

Task Specification: We showed that reward models learned from human preferences (RLHF) need off-policy corrections [COLM1]. I also (co-)investigated different ways to accumulate rewards, beyond the simple discounted sum [RLC3], such as range, min, max, variance, etc.

I’m always happy to chat about research, so feel free to reach out by e-mail or socials!

news

| Date | News |
|---|---|
| Jul 08, 2025 | Off-Policy Corrected Reward Modeling for RLHF has been accepted at COLM 2025 |
| May 10, 2025 | Two papers (1, 2) accepted at RLC 2025 |
| May 17, 2024 | Our work on Offline Reinforcement Learning from Datasets with Structured Non-Stationarity was accepted at RLC 2024 |

latest posts

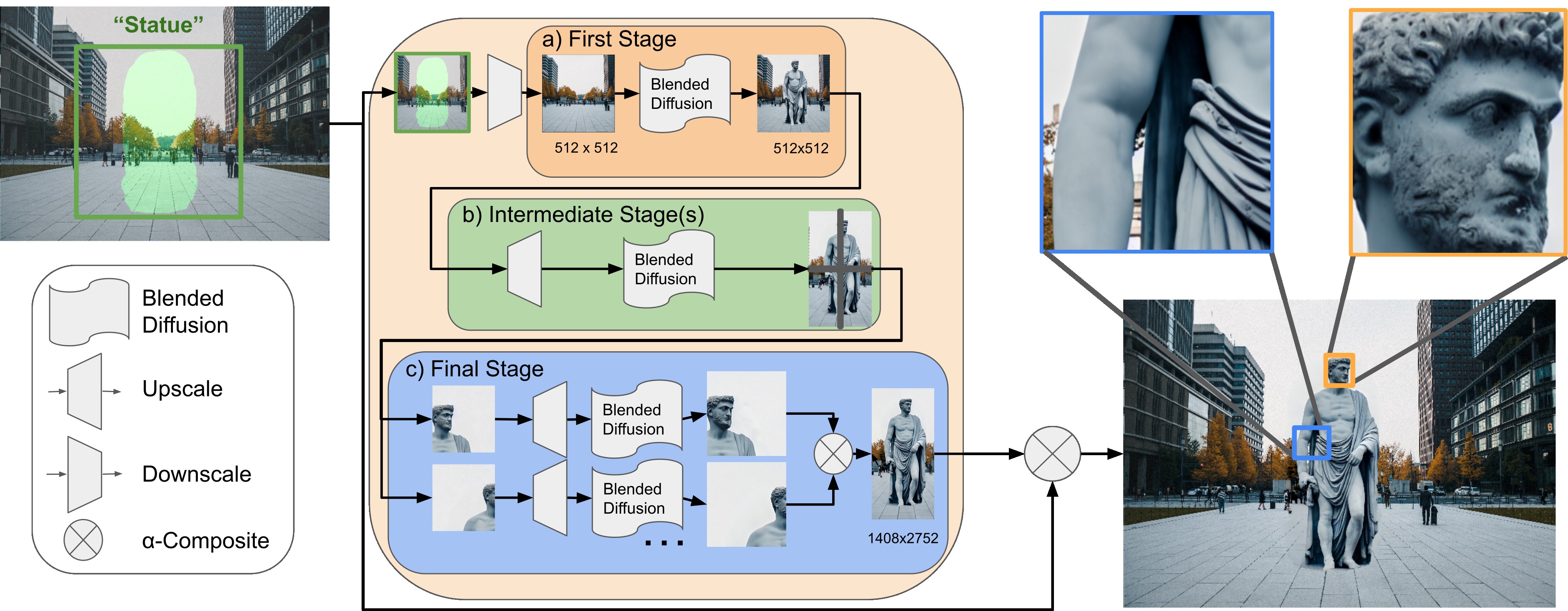

| Date | Post |
|---|---|
| Apr 20, 2022 | Building a Text to Image Web App |